So far, we have introduced several case studies, drawing on neuroscience and psychology research articles, that show how research in education, marketing, healthcare, and gaming can be deepened by combining human physiological data such as electroencephalography (EEG) and pupil information with VR. This is not only about broadening the realm of brain-computer interfaces; the core value lies in enriching human life through a better understanding of physiological signals. From adaptive educational content that encourages students’ full engagement, to neurofeedback therapy for patients with PTSD or ADHD, to physiological data-driven in-person marketing widely used in the game industry, the applicability of neuroscience to education, marketing, medical science, and more has already been demonstrated. However, this is not the end. Recently, a study has supported the validity of combining EEG with VR to study language processing in naturalistic environments.

The importance of building contextually-rich realistic environments

As we all know intuitively from everyday communication, context plays a crucial role in language processing. In addition, visual cues alongside auditory stimuli significantly help our brains process meaningful information during any kind of human-to-human interaction. Consequently, realistic models of language comprehension should be developed to understand language processing in contextually rich environments. Nevertheless, researchers in this field have struggled to design their research environments: it is tricky to set up a naturalistic environment that resembles everyday settings while keeping enough control over both linguistic and non-linguistic information, however contextually rich the situation is. Anyone who has tackled this issue should pay attention to this article, because the combination of VR and EEG could be the solution. This week’s review is about “The combined use of virtual reality and EEG to study language processing in naturalistic environments.” By combining VR and EEG, strictly controlled experiments in a more naturalistic environment come within reach, offering a clearer understanding of how we process language.

VR to enhance the level of realism in your experiment

To start with, why should VR be used to design your experiment? As many sources define it, a virtual environment is a space where people can have sensory experiences much like those in the real world, and where the users’ every action can be tracked in real time. Accordingly, VR’s fundamental strength is that it allows researchers to increase the ecological validity of a study while keeping full experimental control. EEG combined with VR therefore makes it possible to correlate people’s physiological signals with their every movement in the designed environment. The combination of the two has already been used to study driving behavior, spatial navigation, spatial presence, and more.

Why not extend this methodology to the study of language processing? Some of you might doubt whether natural human behavior can be properly examined in a virtual environment. Since every line of a conversation in VR is spoken by an artificial voice, it might be hard for people to become fully engaged in the interaction. In other words, some skeptics argue that Human-Computer Interaction (HCI) and Human-Human Interaction (HHI) are different, so VR is only suitable for studying HCI. However, this worry turned out to be unfounded. The study by Heyselaar, Hagoort, and Segaert (2017) showed that the way people adapt their speech rate and pitch to an interlocutor does not differ between a virtual interlocutor and a human one. This suggests that it is plausible to observe language processing in a virtual environment in order to understand language processing in real life.

The N400 response is well observed in the VR setting

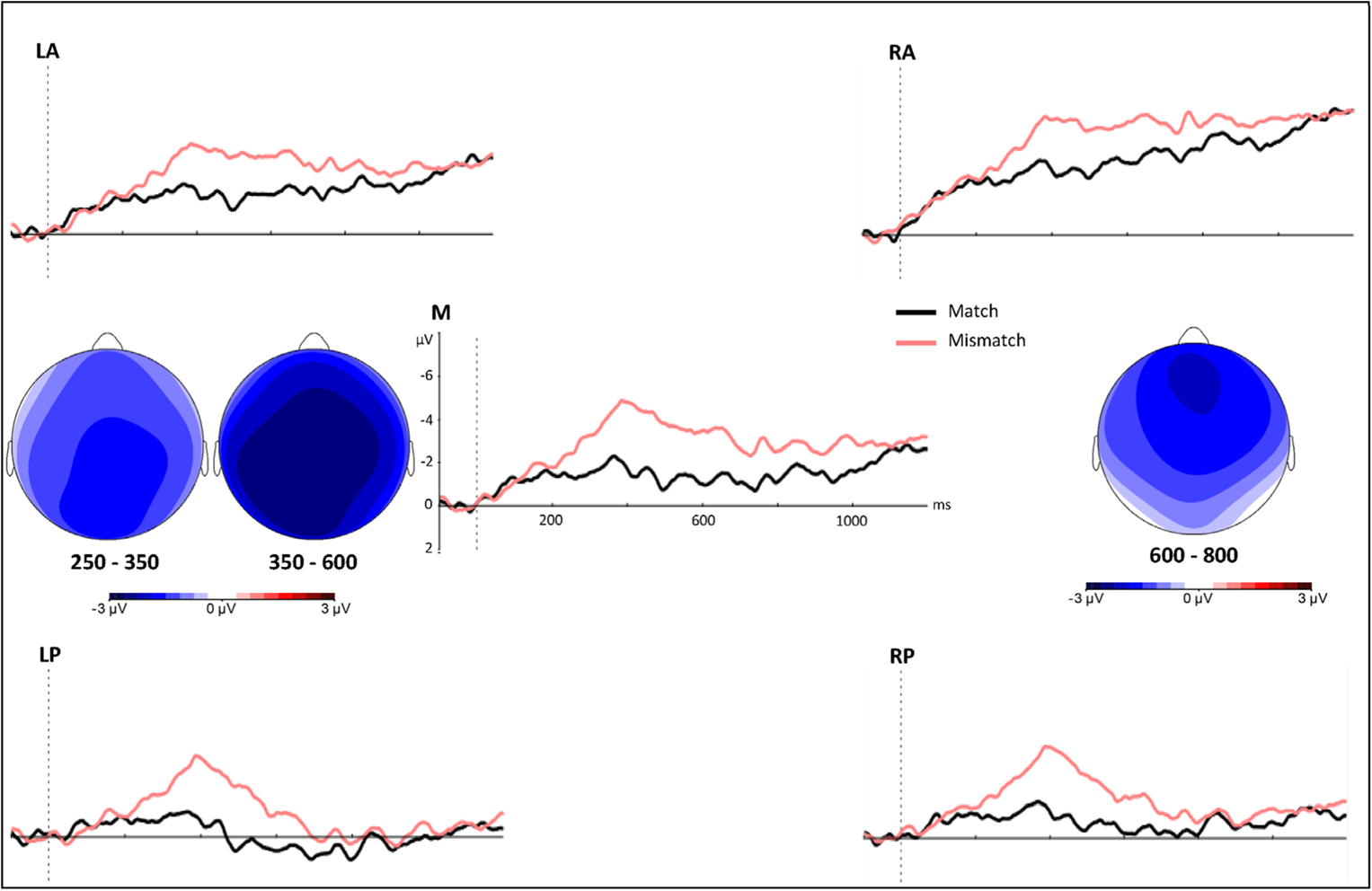

Johanne Tromp and colleagues conducted an experiment to validate the combined use of VR and EEG as a tool to study the neurophysiological mechanisms of language processing and comprehension. They set out to demonstrate its validity by showing that the N400 response occurs in a virtual environment much as it does in traditional settings. The N400 is an event-related potential (ERP) component that peaks around 400 ms after the critical stimulus; previous studies in traditional settings have found that incongruence between spoken and visual stimuli elicits an enhanced N400. Therefore, the research team set up situations containing mismatches between verbal and visual stimuli and analyzed the brainwaves to observe the N400 response.

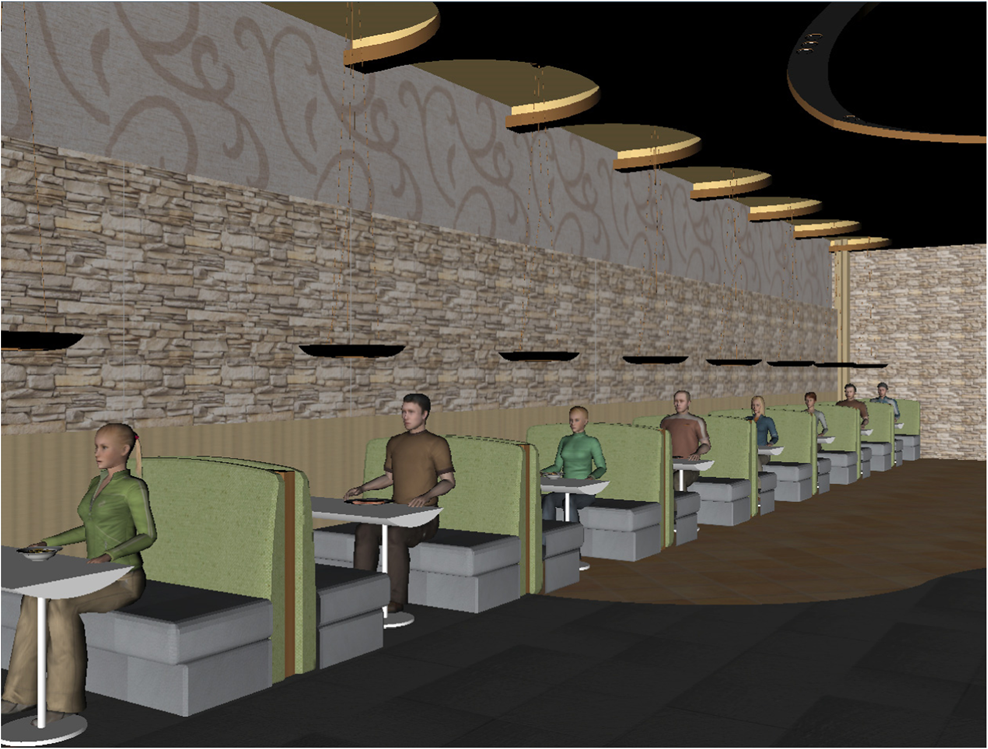

In the experiment, a total of 25 people were placed in a virtual restaurant, built with Vizard (virtual reality software), where eight tables stood in a row with a virtual guest sitting at each table. The participants were moved from table to table following a preprogrammed procedure. The materials consisted of 80 objects and 96 sentences (80 experimental sentences and 16 fillers). All of them were relevant to the restaurant setting, but only half of the object and sentence pairs were semantically matched. For instance, if there is a salmon dish on the table and the virtual guest sitting at the table says “I just ordered this salmon,” the pair is well matched. On the other hand, if the sentence paired with the salmon is “I just ordered this pasta,” the two are mismatched. Each participant went through equal numbers of match and mismatch situations and made 12 rounds through the restaurant during the entire experiment. At the end, they were asked two questions to assess whether they had paid attention and how they perceived the virtual agents.
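To make the design concrete, here is a minimal Python sketch of how such a trial list could be assembled. It is not the authors’ actual procedure: it assumes one sentence per table visit (so that 96 sentences fill 12 rounds of 8 tables), it randomizes order in a simple way, and the function name `build_trial_list` and the dictionary fields are hypothetical.

```python
import random

N_EXPERIMENTAL = 80  # experimental sentences (from the article)
N_FILLER = 16        # filler sentences (from the article)
N_TABLES = 8         # tables per round (from the article)
N_ROUNDS = 12        # rounds through the restaurant (from the article)


def build_trial_list(seed=0):
    """Return a list of rounds; each round is a list of per-table trial dicts."""
    rng = random.Random(seed)

    # Half of the experimental items are matches, half are mismatches.
    half = N_EXPERIMENTAL // 2
    conditions = ["match"] * half + ["mismatch"] * half
    rng.shuffle(conditions)
    trials = [{"item": i, "condition": c} for i, c in enumerate(conditions)]

    # Fillers are only labelled as such; the article gives no further detail.
    trials += [
        {"item": N_EXPERIMENTAL + i, "condition": "filler"}
        for i in range(N_FILLER)
    ]
    rng.shuffle(trials)

    # Assumption: one sentence per table visit, so 96 trials = 12 rounds x 8 tables.
    assert len(trials) == N_TABLES * N_ROUNDS
    rounds = []
    for r in range(N_ROUNDS):
        block = trials[r * N_TABLES:(r + 1) * N_TABLES]
        rounds.append([dict(t, table=k + 1) for k, t in enumerate(block)])
    return rounds


rounds = build_trial_list()
counts = {}
for block in rounds:
    for trial in block:
        counts[trial["condition"]] = counts.get(trial["condition"], 0) + 1
print(counts)  # number of trials per condition
```

A real experiment would likely add further constraints (for example, limiting runs of the same condition), which this sketch leaves out.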

The EEG was recorded from 59 active electrodes throughout all rounds of the experiment. Epochs from 100 ms before the onset of the critical noun to 1200 ms after it were selected, and the ERPs were then calculated and analyzed per participant and condition in three time windows: the N400 window (350–600 ms), an earlier window (250–350 ms), and a later window (600–800 ms). Finally, repeated-measures analyses of variance (ANOVAs) were performed for the three predetermined time windows, with the factors condition (match, mismatch), region (vertical midline, left anterior, right anterior, left posterior, right posterior), and electrode.
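The epoching and time-window steps can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the sampling rate `SFREQ` is hypothetical (the article does not give one), subtracting the mean of the 100 ms pre-onset interval is a common convention rather than something the article states, the signal is a single channel of random noise, and the onsets are made up.

```python
import random
from statistics import fmean

SFREQ = 500                    # Hz; hypothetical, not given in the article
PRE_MS, POST_MS = 100, 1200    # epoch limits around noun onset (from the article)
WINDOWS_MS = {                 # analysis windows (from the article)
    "early": (250, 350),
    "n400": (350, 600),
    "late": (600, 800),
}


def ms_to_samples(ms):
    """Convert milliseconds to a whole number of samples."""
    return ms * SFREQ // 1000


def epoch(signal, onset):
    """Cut one epoch around an onset sample and subtract the pre-onset mean."""
    pre, post = ms_to_samples(PRE_MS), ms_to_samples(POST_MS)
    segment = signal[onset - pre:onset + post]
    baseline = fmean(segment[:pre])  # assumed baseline: the 100 ms before onset
    return [v - baseline for v in segment]


def erp(epochs):
    """Average the epochs sample by sample."""
    return [fmean(samples) for samples in zip(*epochs)]


def window_means(erp_wave):
    """Mean ERP amplitude in each analysis window."""
    pre = ms_to_samples(PRE_MS)
    return {
        name: fmean(erp_wave[pre + ms_to_samples(lo):pre + ms_to_samples(hi)])
        for name, (lo, hi) in WINDOWS_MS.items()
    }


# Synthetic single-channel data: 30 s of noise with made-up noun onsets.
rng = random.Random(0)
signal = [rng.gauss(0.0, 1.0) for _ in range(30 * SFREQ)]
onsets = range(2 * SFREQ, 25 * SFREQ, 3 * SFREQ)
epochs = [epoch(signal, o) for o in onsets]
means = window_means(erp(epochs))
print(len(epochs), len(epochs[0]), sorted(means))
```

In practice this would be done per electrode and per condition, typically with a dedicated EEG library rather than hand-written loops.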

The results are shown in Fig. 2: ERPs appeared more negative for the mismatch condition than for the match condition in all time windows, and the difference was particularly pronounced during the N400 window. In other words, the N400 response was observed in line with predictions, supporting the view that VR and EEG combined can be used to study language comprehension.
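As a rough illustration of the match versus mismatch contrast, the sketch below computes a paired t statistic on per-participant N400-window amplitudes. It is a deliberately simplified stand-in for the repeated-measures ANOVA used in the study: it ignores the region and electrode factors, and the amplitudes are randomly generated placeholders, not the study’s data. For a single within-subject factor with two levels, the ANOVA F value equals the square of this t value.

```python
import math
import random
from statistics import fmean, stdev


def paired_t(match, mismatch):
    """Paired t statistic and degrees of freedom for mismatch minus match."""
    diffs = [mm - m for m, mm in zip(match, mismatch)]
    n = len(diffs)
    t = fmean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Placeholder amplitudes (arbitrary units) for 25 participants; NOT real data.
rng = random.Random(1)
n_participants = 25  # sample size from the article
match = [rng.gauss(0.0, 1.0) for _ in range(n_participants)]
mismatch = [m + rng.gauss(-1.0, 1.0) for m in match]

t, df = paired_t(match, mismatch)
print(f"t({df}) = {t:.2f}, equivalent F(1, {df}) = {t * t:.2f}")
```

A negative t here would mean more negative amplitudes in the mismatch condition, which is the direction reported for the N400 effect.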

Remaining problem: The use of two separate devices

Nevertheless, this study still has shortcomings that stem from using two separate devices, the EEG cap and the VR helmet, at the same time. Because the head-mounted display (HMD) must fit tightly around the user’s head, wearing an EEG cap together with it is challenging and burdensome. Moreover, since the EEG cap is sensitive to movement, it is hard to realize the full potential of a virtual environment, where people are expected to interact and move dynamically. In fact, this limitation is a real bottleneck that keeps the experiment far from a truly realistic environment.

Solution: The all-in-one device with VR-compatible sensors

Is there a silver bullet to overcome this barrier? The answer is yes. The problem described above can be solved with an all-in-one device equipped with VR-compatible sensors. Here is the solution: LooxidVR. Having recently won a Best of Innovation Award at CES 2018, Looxid Labs has introduced a system that integrates two eye-tracking cameras and six EEG brainwave sensors into a phone-based VR headset. With LooxidVR, it becomes possible to collect and analyze human physiological data while users interact with a fully immersive environment.

source: AR-VR journey